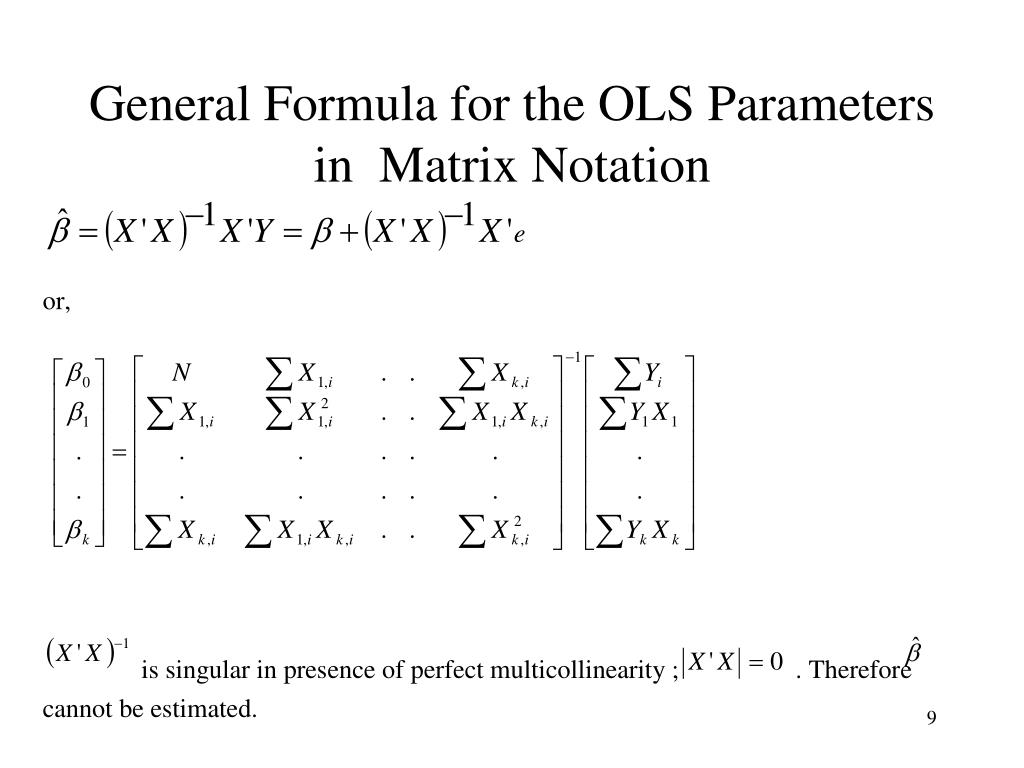

OLS in Matrix Form

We present here the main OLS algebraic and finite-sample results in matrix form. The linear regression model is

\[ y = X\beta + e, \]

where y and e are column vectors of length n (the number of observations), X is a matrix of dimensions n × k (k is the number of regressors, counting the constant), and β is the k × 1 vector of coefficients.

The matrix X is sometimes called the design matrix: the matrix of predictors/covariates in a regression. When the model includes an intercept, its first column is a column of ones:

\[ X = \begin{bmatrix} 1 & x_{11} & x_{12} & \dots & x_{1,k-1} \\ 1 & x_{21} & x_{22} & \dots & x_{2,k-1} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & x_{n1} & x_{n2} & \dots & x_{n,k-1} \end{bmatrix} \]
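To make the shapes concrete, here is a minimal pure-Python sketch (no third-party libraries assumed) that builds X by prepending a column of ones to the raw covariates and then forms y = Xβ + e. The helper names `design_matrix` and `mat_vec`, and all of the numbers, are hypothetical and chosen only for illustration.

```python
# Minimal sketch (hypothetical helpers and data): build the n x k design
# matrix X by prepending a column of ones, then form y = X beta + e.

def design_matrix(covariates):
    """Return X whose i-th row is [1, x_i1, ..., x_i,k-1]."""
    return [[1.0] + [float(v) for v in row] for row in covariates]


def mat_vec(X, beta):
    """Compute the length-n vector X beta as row-by-row dot products."""
    if any(len(row) != len(beta) for row in X):
        raise ValueError("every row of X must have k = len(beta) entries")
    return [sum(x_ij * b_j for x_ij, b_j in zip(row, beta)) for row in X]


# Hypothetical data: n = 4 observations of two covariates, so k = 3.
covariates = [[2.0, 1.0], [3.0, 0.0], [5.0, 2.0], [7.0, 1.0]]
X = design_matrix(covariates)
beta = [1.0, 0.5, -2.0]       # hypothetical coefficients
e = [0.1, -0.2, 0.0, 0.1]     # hypothetical errors
y = [xb + ei for xb, ei in zip(mat_vec(X, beta), e)]  # y = X beta + e

print(len(X), len(X[0]))  # prints: 4 3  (n, k)
```

Here k = 3: two covariates plus the constant column. A linear-algebra library would normally handle this; plain lists just keep every index visible.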


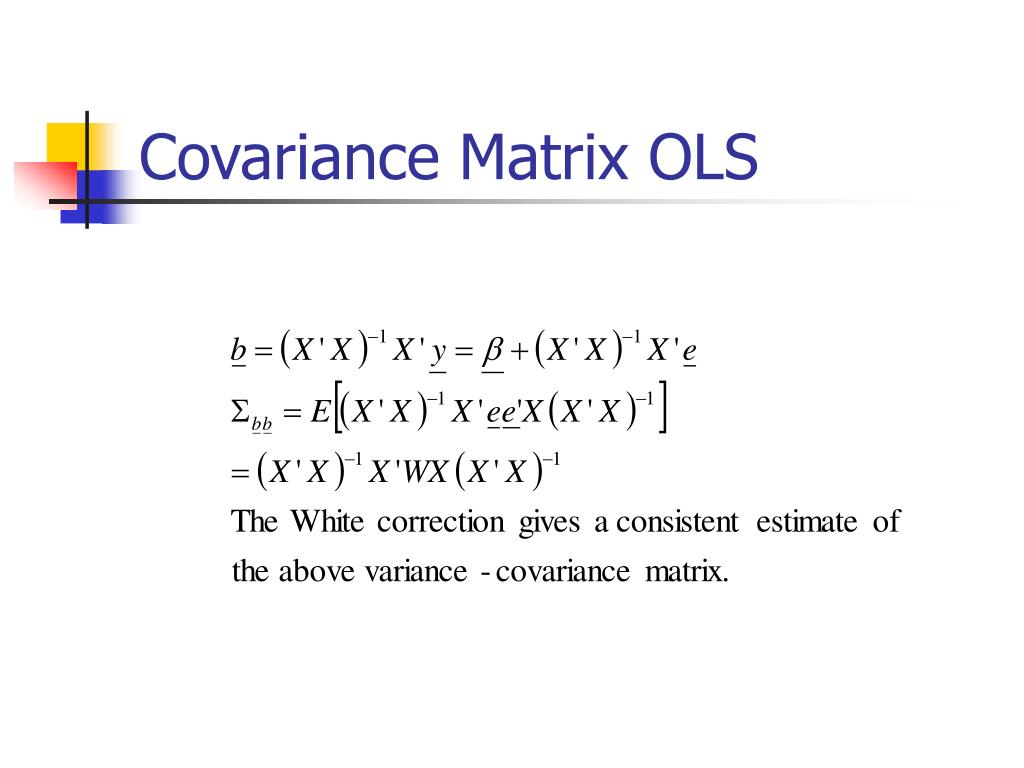


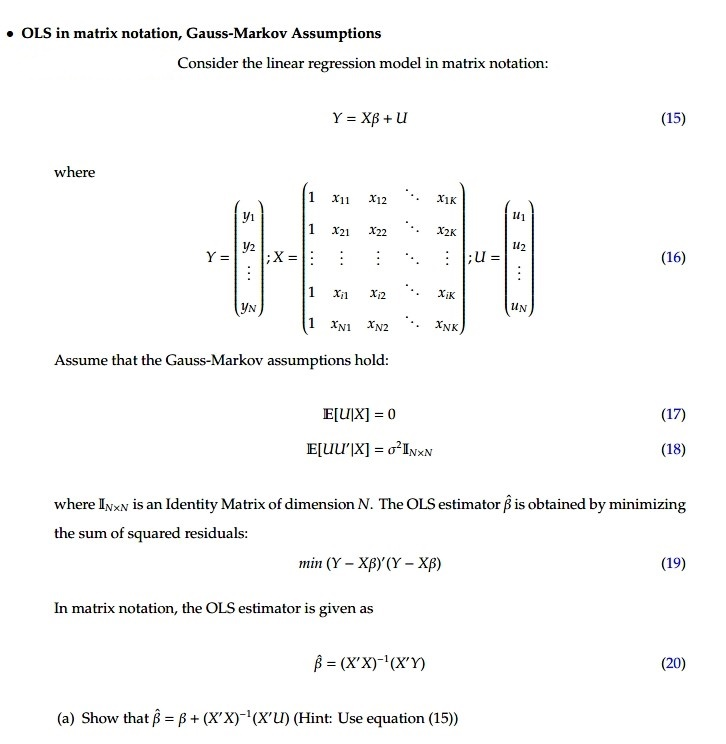


1.2 Mean Squared Error

At each data point, using the coefficients β results in some error of prediction, so with n observations we have n prediction errors. Stacked, they form the vector

\[ e(\beta) = y - X\beta, \]

and the mean squared error is the average of their squares:

\[ \mathrm{MSE}(\beta) = \frac{1}{n} \sum_{i=1}^{n} e_i(\beta)^2 = \frac{1}{n} e(\beta)' e(\beta). \]
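The MSE can be computed straight from its definition. The sketch below, again plain Python with hypothetical helper names and made-up data, also minimises it by solving the normal equations X'Xb = X'y. That step is the standard OLS result (set the gradient of the MSE to zero), which this section does not derive; the small Gaussian-elimination `solve` is an illustrative stand-in for a proper linear-algebra routine.

```python
# Sketch (hypothetical helpers and data): prediction errors e(beta) = y - X beta,
# MSE(beta) = e'e / n, and the OLS minimiser from (X'X) b = X'y.

def residuals(X, y, beta):
    """Return the n prediction errors e_i = y_i - x_i' beta."""
    return [yi - sum(a * b for a, b in zip(row, beta)) for row, yi in zip(X, y)]


def mse(X, y, beta):
    """Mean squared error (1/n) e(beta)' e(beta)."""
    e = residuals(X, y, beta)
    return sum(ei * ei for ei in e) / len(e)


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, row)) + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: X lacks full column rank")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve(XtX, Xty)


# Hypothetical data: intercept column plus one covariate.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [1.0, 3.5, 4.5, 7.0]
b_hat = ols(X, y)
print(b_hat, mse(X, y, b_hat), mse(X, y, [1.0, 2.0]))
```

With this data the fitted coefficients come out near (1.15, 1.9), with an MSE of about 0.1125, lower than the 0.125 obtained at β = (1, 2).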

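Full Column Rank

The design matrix is assumed to have full column rank k. That is, no column is a linear combination of the other columns; equivalently, there is no nonzero (k × 1) vector c such that Xc = 0. This is exactly the condition under which X'X is invertible.

Two vector facts are used repeatedly: for a vector x, x'x is the sum of squares of the elements of x (a scalar), while xx' is an n × n matrix whose ij-th element is x_i x_j.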
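Both facts are easy to check numerically. This sketch (hypothetical helpers and made-up matrices, plain Python) computes the column rank of X by row reduction with a floating-point tolerance, exhibits a nonzero c with Xc = 0 for a matrix whose third column is twice its second, and compares x'x with xx'.

```python
# Sketch (hypothetical helpers and data): full column rank and the
# vector identities x'x (scalar) and xx' (n x n matrix).

def column_rank(X, tol=1e-10):
    """Rank of X by row reduction; X has full column rank when this equals k."""
    M = [[float(v) for v in row] for row in X]
    n, k = len(M), len(M[0])
    rank = 0
    for col in range(k):
        if rank == n:
            break  # every row already holds a pivot
        pivot = max(range(rank, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < tol:
            continue  # no pivot: this column is a combination of earlier ones
        M[rank], M[pivot] = M[pivot], M[rank]
        for r in range(rank + 1, n):
            f = M[r][col] / M[rank][col]
            for c in range(col, k):
                M[r][c] -= f * M[rank][c]
        rank += 1
    return rank


def inner(x):
    """x'x: the sum of squares of the elements of x (a scalar)."""
    return sum(v * v for v in x)


def outer(x):
    """xx': the n x n matrix whose (i, j) element is x_i * x_j."""
    return [[xi * xj for xj in x] for xi in x]


X_ok = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]                     # k = 2
X_bad = [[1.0, 2.0, 4.0], [1.0, 3.0, 6.0], [1.0, 5.0, 10.0]]   # col 3 = 2 * col 2
c = [0.0, 2.0, -1.0]  # nonzero c with X_bad c = 0
print(column_rank(X_ok), column_rank(X_bad))                   # 2 2
print([sum(a * b for a, b in zip(row, c)) for row in X_bad])   # [0.0, 0.0, 0.0]
x = [1.0, 2.0, 3.0]
print(inner(x), outer(x))  # 14.0 [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [3.0, 6.0, 9.0]]
```

X_ok has rank 2 = k, so it has full column rank; X_bad has rank 2 < k = 3, and c is a witness. The tolerance is a pragmatic choice for floating-point data, not part of the mathematical definition.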